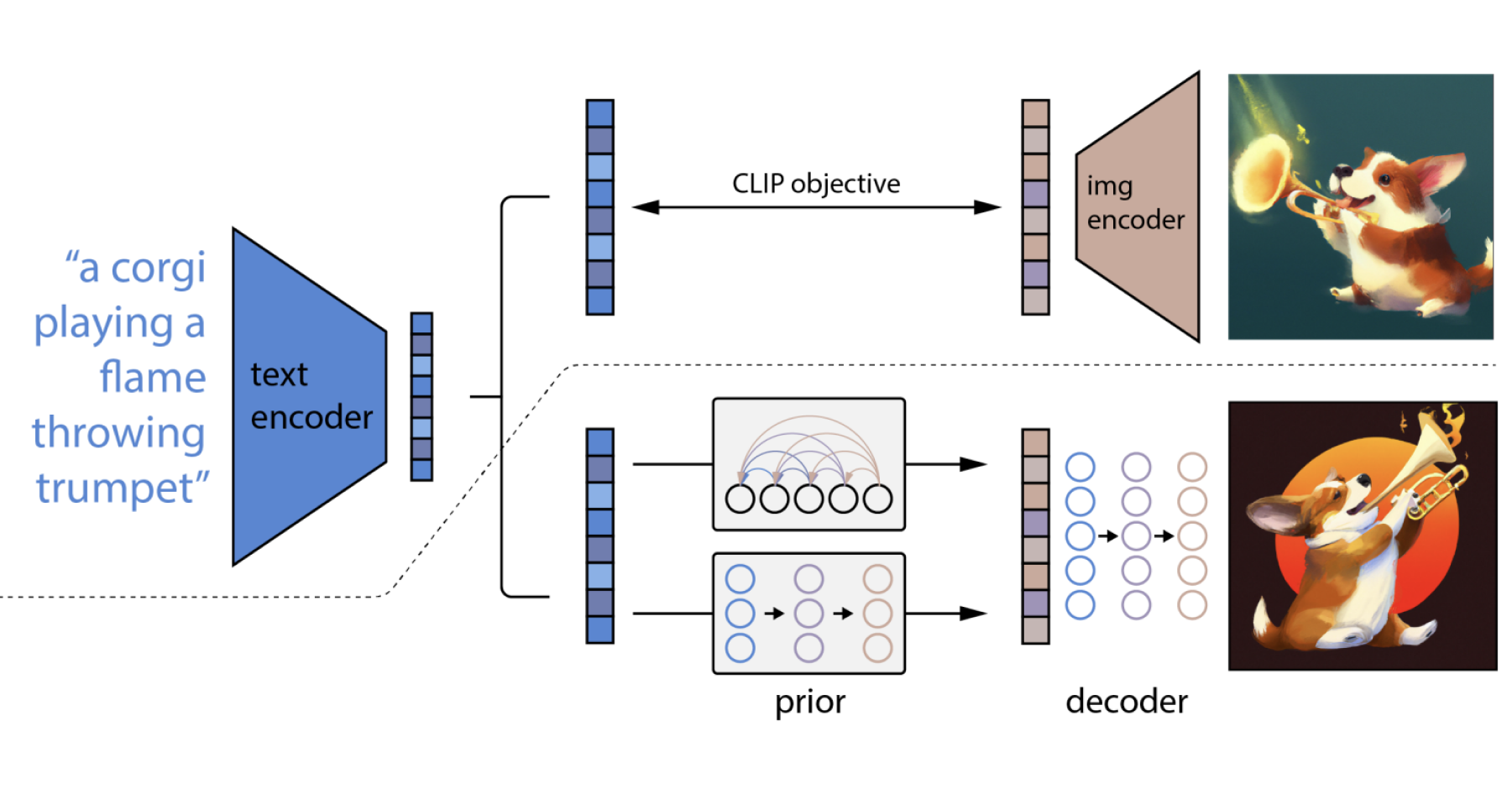

OpenAI's unCLIP Text-to-Image System Leverages Contrastive and Diffusion Models to Achieve SOTA Performance | Synced

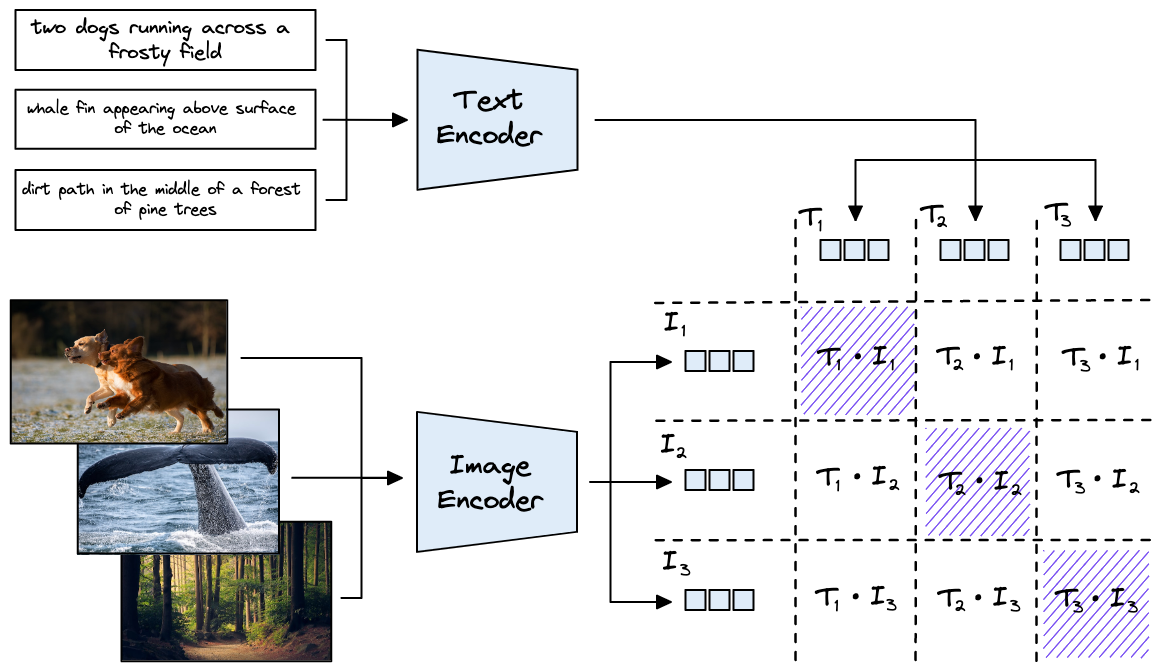

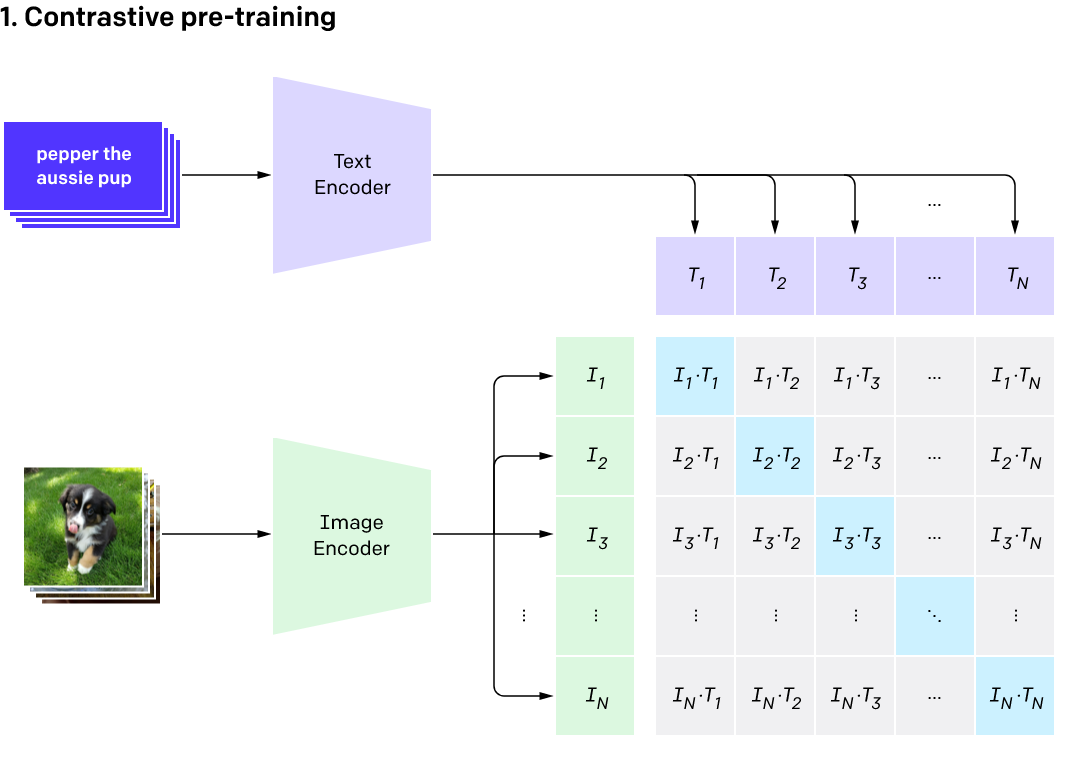

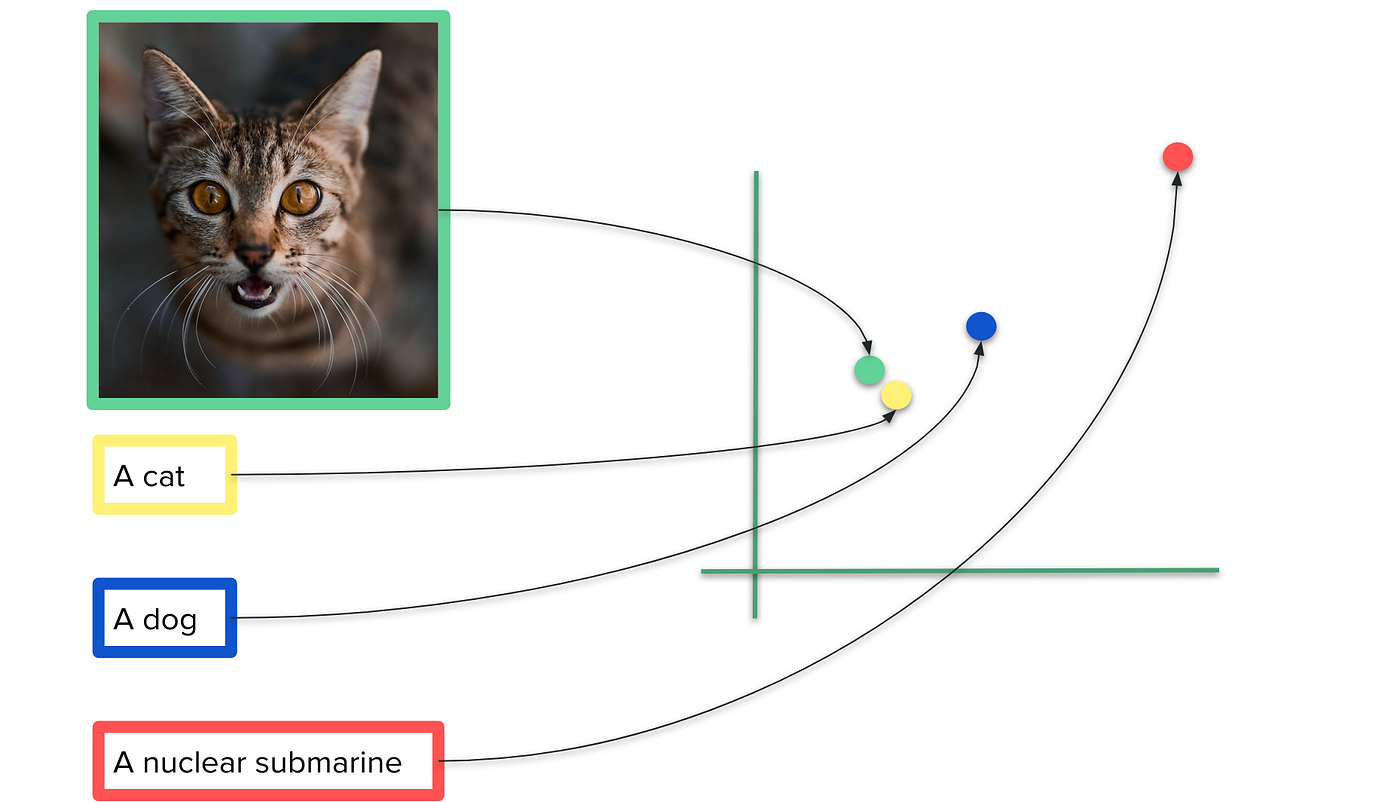

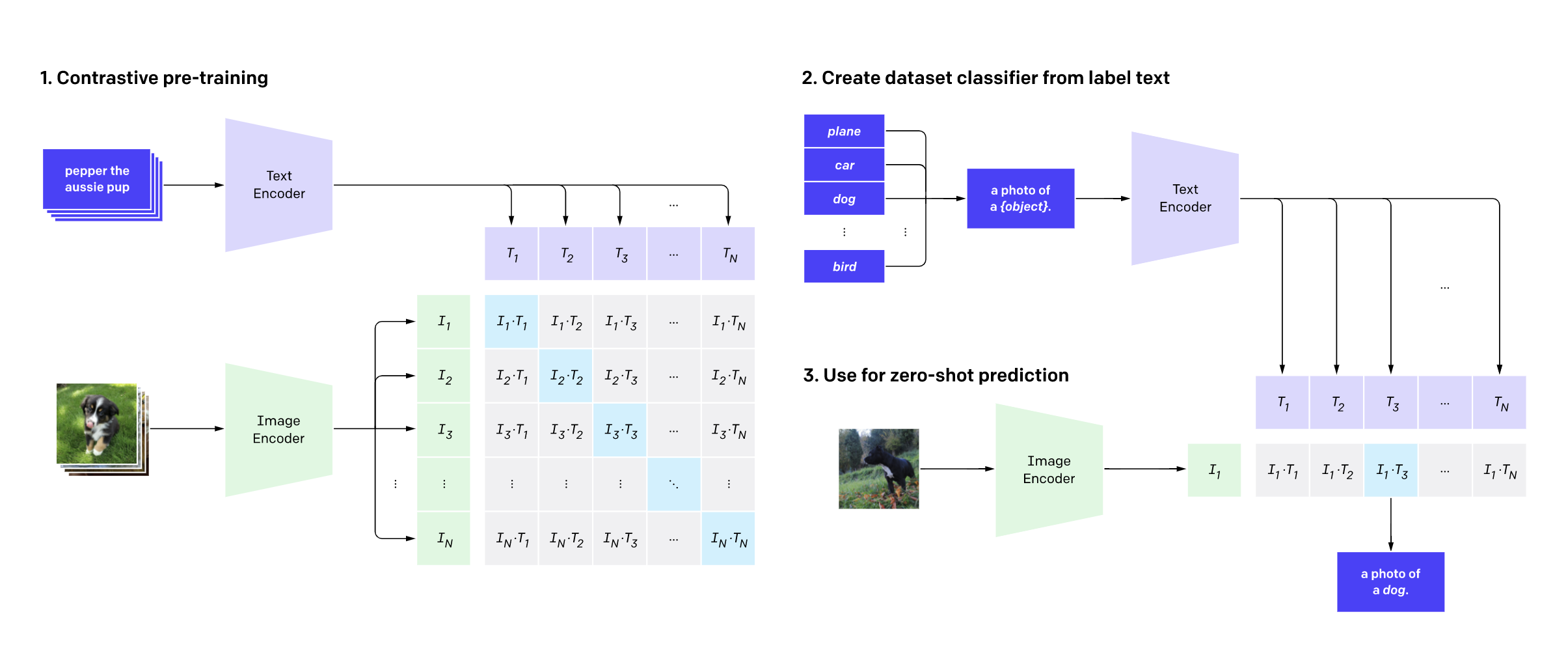

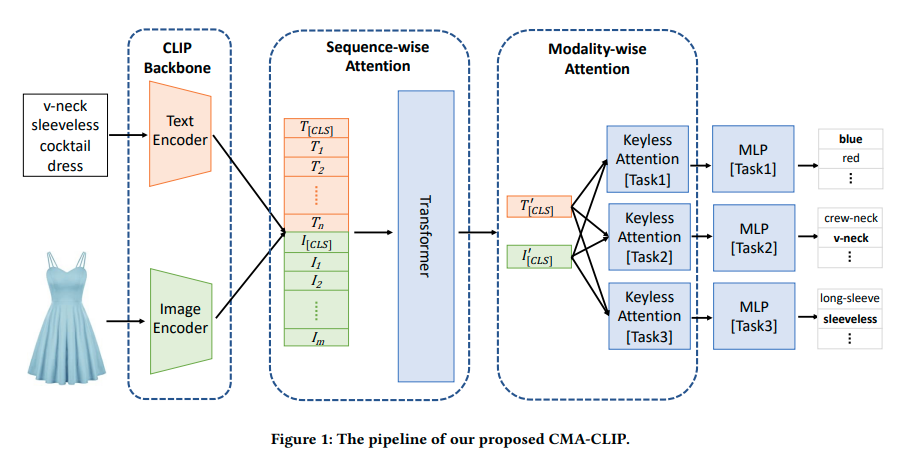

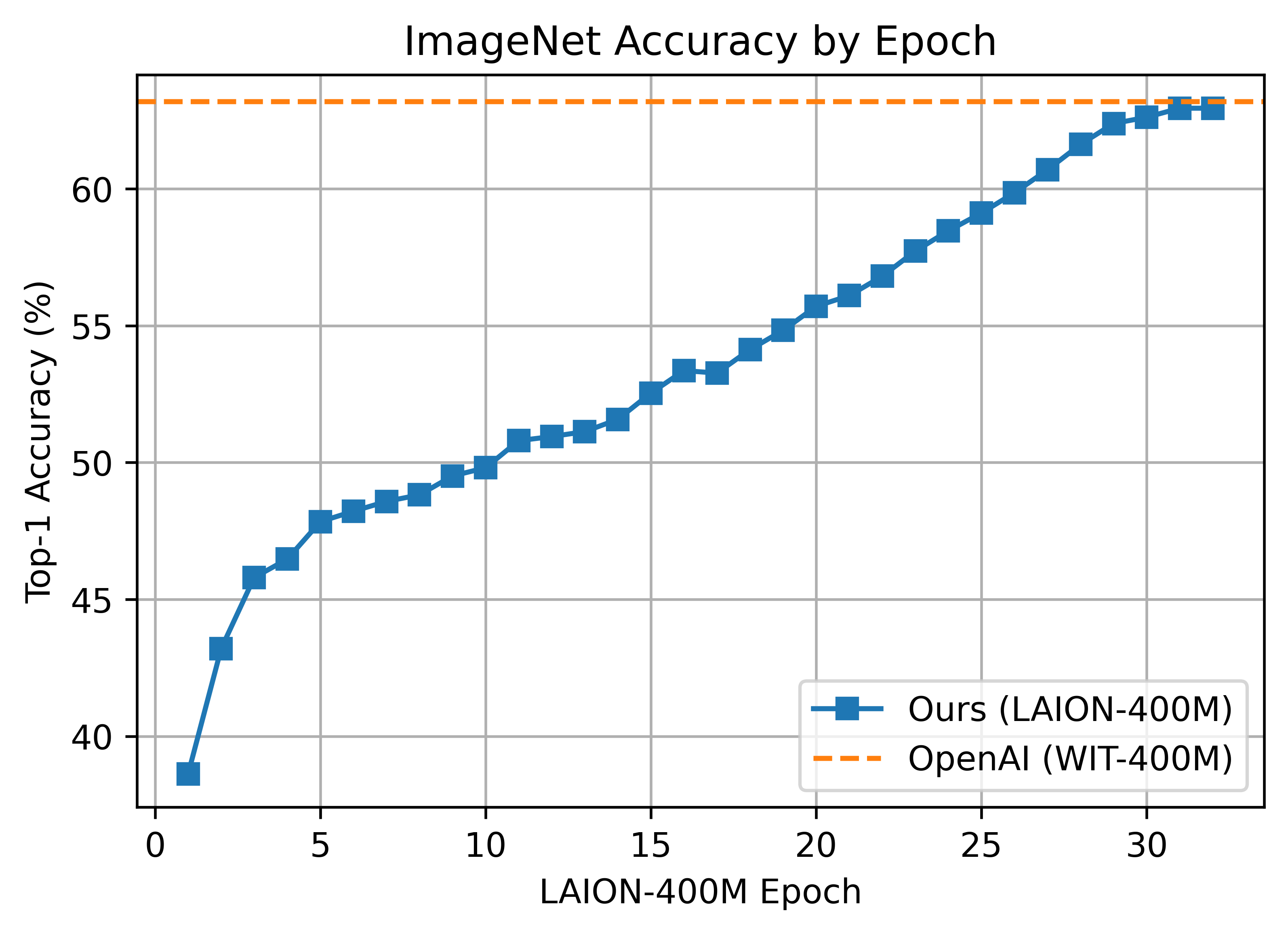

Process diagram of the CLIP model for our task. This figure is created... | Download Scientific Diagram

Meet CLIPDraw: Text-to-Drawing Synthesis via Language-Image Encoders Without Model Training | Synced

Collaborative Learning in Practice (CLiP) in a London maternity ward: a qualitative pilot study - ScienceDirect

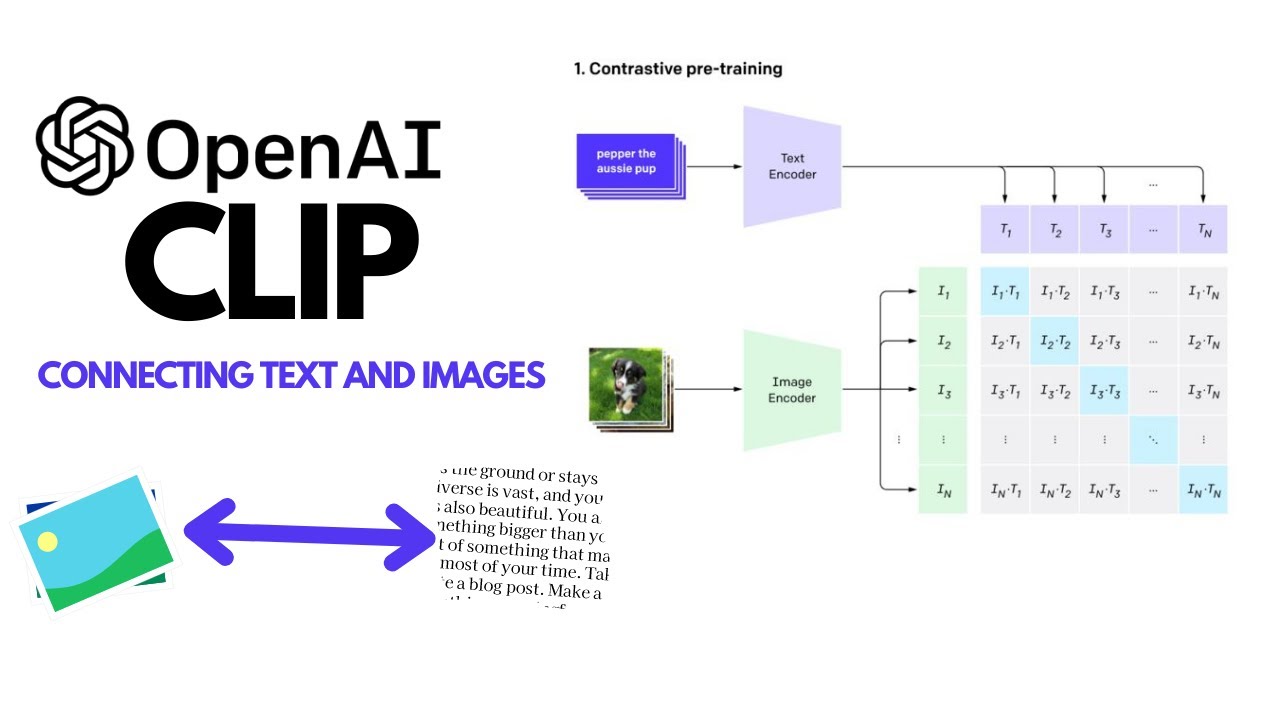

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube

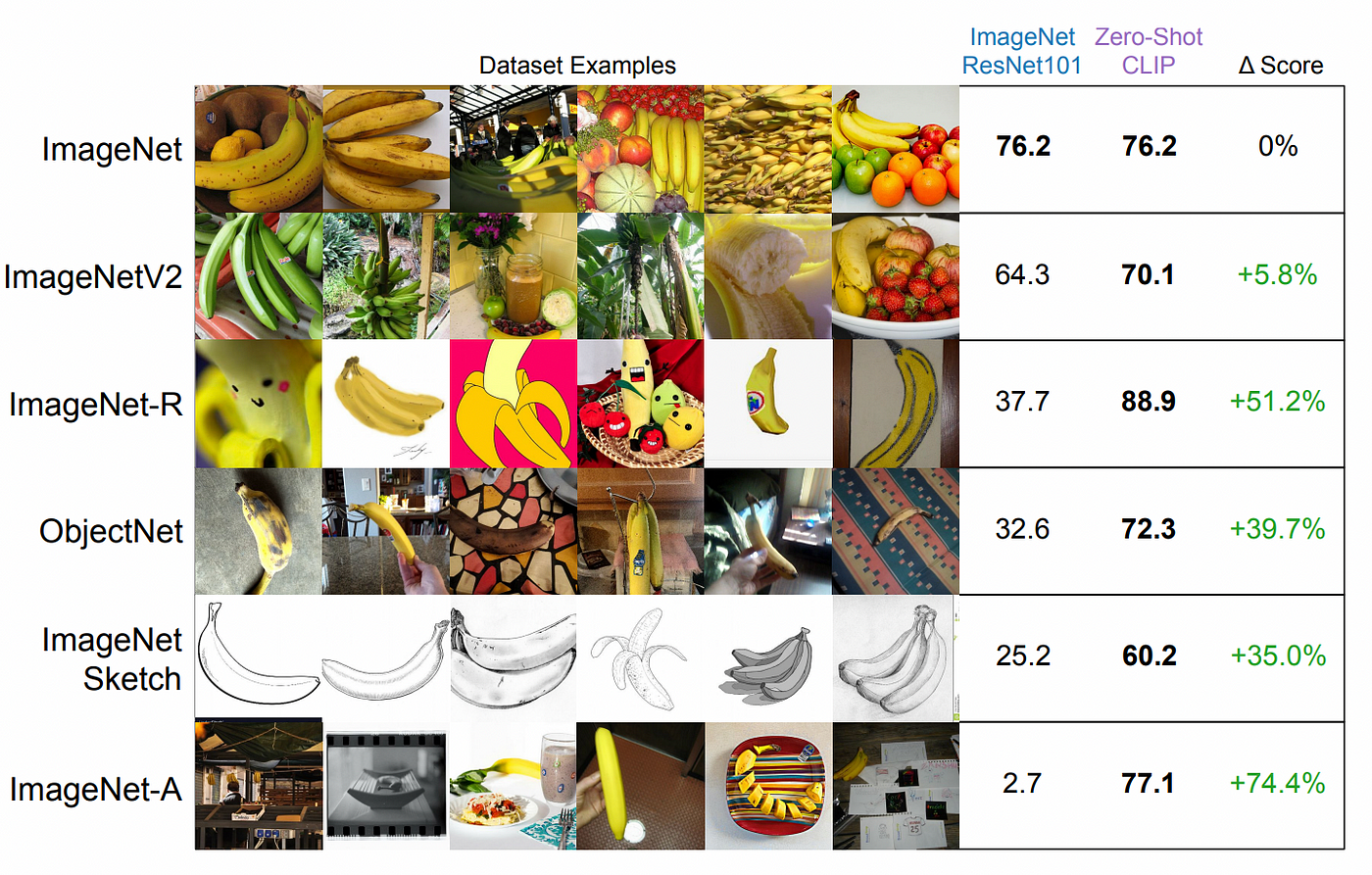

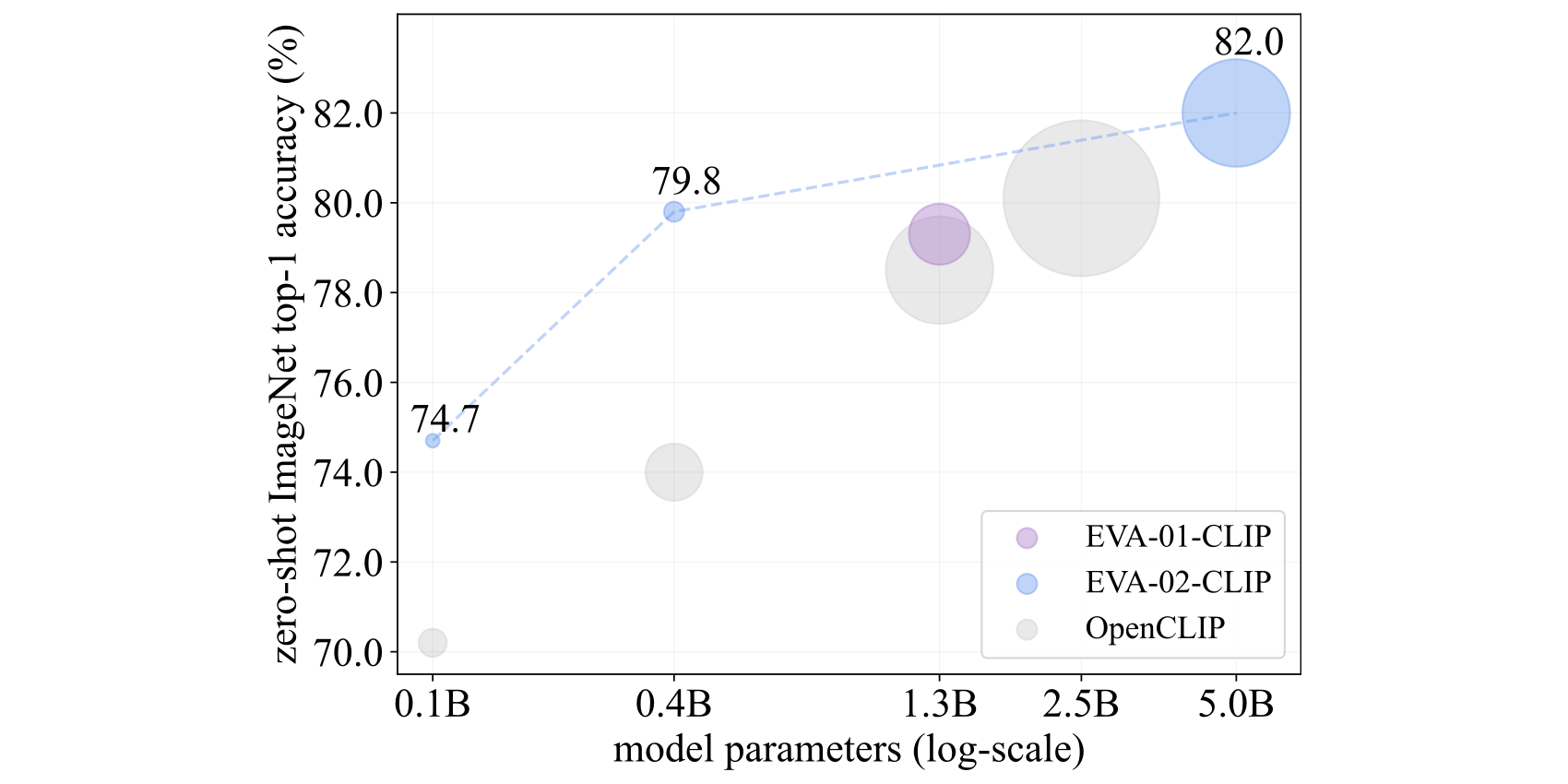

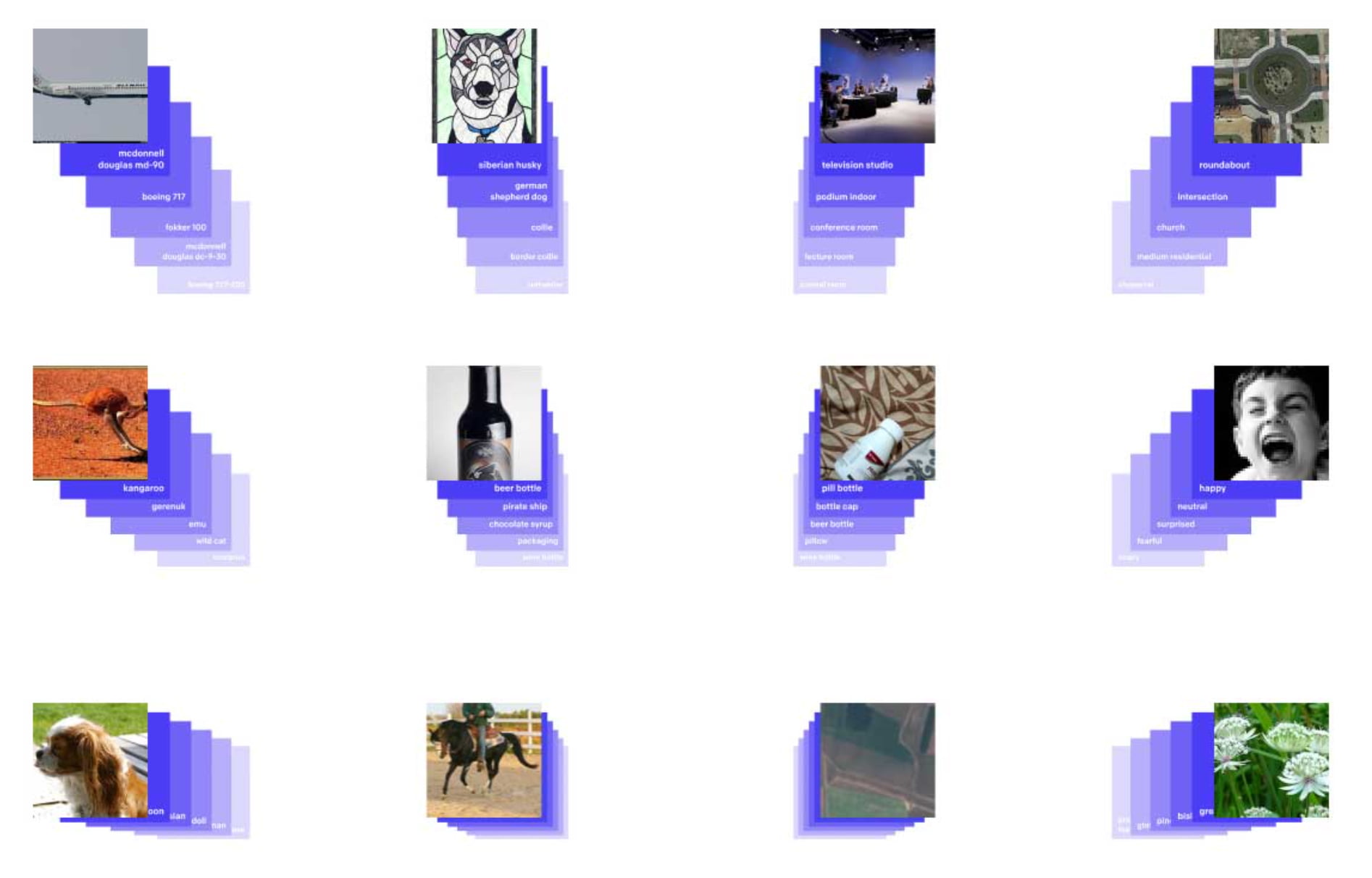

Implement unified text and image search with a CLIP model using Amazon SageMaker and Amazon OpenSearch Service | AWS Machine Learning Blog

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image